Apologies for being offline for quite a while; my weeks turned out to be busier than expected, and I've been collecting quite a lot of stuff to share with you all :) Many thanks to JQ for his recent posts on all things geeky and awesome.

This time, we're going into sci-fi territory, and it's about Quantic Dream, the French video game developer behind the groundbreaking interactive movie game "Heavy Rain" (and also "Omikron: The Nomad Soul" and "Indigo Prophecy"). Interestingly, they've been trying to recreate real-life experiences in their games ever since MMX was the cream of the crop for computer processors (I'm old), and they've come a long way from their roots.

Lo and behold, Quantic Dream recently released a new PS3 developer demo titled "Kara", which demonstrates that the PS3 is still able to produce awesome graphics despite its age:

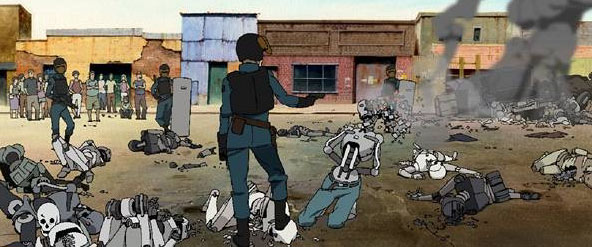

What strikes me about "Kara" is its exploration of one of the most prominent hot-button topics in the realm of sci-fi: the human/robot dichotomy. Thoughts of The Terminator, Blade Runner, The Matrix/The Animatrix, I, Robot, A.I., and Battlestar Galactica would surely come to mind for some of us...

Thinking about The Animatrix's "The Second Renaissance" still gives me the creeps.

A discussion on this topic could go on forever, but what I've found is that back in 1970, a Japanese robotics professor named Masahiro Mori coined the term "the uncanny valley" to describe human responses to objects along the spectrum of human likeness, from an industrial robot to a healthy person.

Masahiro Mori, the man who coined "the uncanny valley" a long, long time ago...

Mori actually drew his inspiration from a 1906 essay by Ernst Jentsch titled "On the Psychology of the Uncanny", in which Jentsch tried to describe how people feel when faced with uncertainty. In particular, Jentsch touched briefly on how storytelling gains an added emotional effect when an automaton is placed in the role of a human character.

The graph below illustrates the main point of Dr. Mori's essay: familiarity rises gradually with human likeness until it takes a sharp dive at the zombie/corpse stage, before rising back up to a healthy person's level. Falling sick and dying actually brings a person towards the bottom. I've added a picture of a bunraku puppet for illustration (if you ask me, a bunraku puppet looks rather creepy, like the puppet from Saw).

I guess it helps to put a name to that creepy feeling I get from seeing robots/automatons that resemble humans, and the fear that comes when robots become 99.999% human. For one, I am unsure whether we will see the day when robots completely resemble humans, but I would be a fool to say that it will never happen. A Japanese school has already started trying out Saya, a humanlike robot teacher that can make facial expressions and call out students' names.

Looking at how fast science has advanced over the past few hundred years, and seeing how robots today are starting to look a lot more human than before, we could be in for an interesting time. Science fiction is indeed slowly becoming scientific reality...

A very well-written and interesting article, Joshua. The human/robot dichotomy is a debate that has been discussed at length, yet it remains largely inconclusive on how we are supposed to deal with a true android with superior, humanlike AI.

The question we must ask is this: if an android pursues its right to autonomy and freedom, do we grant it? Do we see it as human then?

It is perplexing, especially since we were the ones who gave it thought.

A very tough question to answer, RedChina. I guess we must consult our morality and conscience on this matter. I say we should give robots or androids their freedom. Then again, what was the purpose of building an AI android that simulates the human mind and features?

A part of me still feels that they are but appliances or tools created for the greater good of mankind.

Wow, a very sophisticated and heavy topic. Joshua never fails to impress me with his deep-thinking articles. So here are my two cents on the topic. It is only right to extend our morality and conscience to an entity that can think, FEEL, and work on its own.

As such, should we cross the new frontier of being able to create a being that can think and feel like a human, we should have the conscience to give it the right to act on its own WILL.

If we have the capability to create something knowing full well that it will have self-awareness, emotions, and the ability to think and feel pain, we must accept responsibility for the possibility that such an entity will choose not to be subjugated to our will and used as a mere tool.

Of course, there is much more to say on this matter, but at a surface level, should we develop such an AI, we must be prepared for all the consequences of our actions. If we are not, we should not give a robot/android the ability to feel pain or be self-aware without a fail-safe.

That's all I can say for now.

Very relevant point, Jiaqi. But it would be hard to hold on to that fundamental principle if androids committed acts of violence against humans. Remember, what sparked the war between robots and humans in The Animatrix was the murder of a family by a robot.

What happens then? Excuse my lack of faith in humanity, but I myself would want retaliation: a mass shutdown of androids.

And then, think about it from the androids' perspective, especially those that are self-aware and able to think: if humans can treat us with impunity, who is to say I am not in danger?

It is a very delicate issue, and I feel that only when it is actually upon us will we know how to react.

Wow, epic article.

My thought on it is that, should something like what Jake brought up happen, we have the power and ability to fall back on a fail-safe, unless we have come up with a way for robots and androids to replicate without depending on humans.

As for the Terminator scenario of Skynet taking over, I guess the safety control would be to always require a human controller to authorize the release of WMDs.

If we enter this new frontier, we must have measures to control it, or all could turn to chaos.

Using Asimov's Three Laws?

But I must commend the great discussion in our midst. It makes me wonder whether, within this lifetime, we will see an AI capable of complete self-awareness and independent learning.

It is funny how I, Robot showed that even with Asimov's Three Laws, shit can still hit the fan. =P

I personally feel that we still have a long way to go before writing a program that is aware of its own existence, a.k.a. self-awareness.

I share Jiaqi's sentiments on how we should deal with androids.

All in all, human fear usually emerges from fear of the unknown. We do not know what to expect should humanlike androids become a reality.

We have also been indoctrinated by all this "robots kill humans and take over the world" theory; it gives us the mindset that it WILL happen, and that fear breeds inside us. (I am not saying it will NEVER happen, but I like to believe that the genius minds who create such an AI would build in safety measures.)

Great nerd blog you guys have here!

I think this article has sparked a pretty awesome discussion on the robot-human relationship. Hopefully, continuous debate and discussion will ensure that, should we gain the capability to create humanoids that can be self-aware, feel emotion, and have a will of their own, we will be able to handle it as humanely and morally as possible.

I wonder what Joshua's take on this is.

Not too long ago, he posted something on The Animatrix, if I remember correctly.

I still maintain that a robot murdering its owner would be no different from a factory machine malfunctioning and causing casualties. But because we humanize it and build it to simulate humans, it seems like a deliberate act of murder rather than a glitch or an error that needs to be rectified.

I know I might be opening Pandora's box here, but Joshua pointed out that Mori's uncanny valley graph plots familiarity against human likeness. This familiarity effect can also be applied along another axis: affiliation with humans. Killing an ant and killing a puppy are identical acts, but because we feel a closer affiliation with a puppy, due to its cuteness or our connection to it, we express outrage when someone blatantly kills a puppy, yet not when someone does the same to an ant.

So should we be ashamed or question our morality if, say, a robot malfunction results in loss of life and we respond by terminating all self-aware androids? I feel that shouldn't be the case.

I might have come on strong on this point, but I do admit that the proper ethics of dealing with it remain a very gray area.

It is a very gray line between what we define as ethical or not. If it were a simple machine, no one would object to shutting down the whole product line after a fatal malfunction, but because we make a machine more humanlike, we feel compelled to show it our humanity.

Then again, in making it feel emotions and think for itself, we are playing GOD and creating a new life form of sorts. So I'd say this: "It is a scary thing to play god."

I've been thinking about this for quite a while (even now!), and I have to say everyone has raised excellent points!

Well, I would say that technology reflects our character, and moral choices start right from the get-go, from the blueprint all the way out of the factory. Our designs set the robot's foundational character, but all bets are off on how the robot develops after that. I do believe an override function/mass shutdown button is essential as an option to stop any dramatic buildup of anti-human sentiment.

The closest analogy I can think of to creating robots is having children: there's always a chance that they'll rebel against you or even kill you, but the difference is that children are like us. However hard we try, we ultimately have to trust robots (if they're capable of independent thought) to make the right choices, and be ready to shut them down immediately if things get out of hand.

I don't feel that I am providing a very comprehensive answer to this robot/human conflict; we don't have such robots yet, and I guess it will take interacting with them to reach more complete conclusions.

Also, I have found a couple of interesting articles about A.I. and robots in combat, and I will post them real soon!